Introduction

I’ve been building a fairly sophisticated product that will need to sustain a high transaction rate using the shipping version of Microsoft’s Entity Framework CodeFirst (4.1) which is part of Visual Studio. I keep meaning to blog my experiences because I’ve been learning a huge amount about how to use and how to abuse the product. My general style for building database intensive apps is not to spend a lot of time on optimization while I’m implementing, but to then go back and fix bottlenecks. Back in the old days (last year), I would pretty much write my own views and then reference the with LINQ2SQL which pretty much solved most of my performance issues. The reason is because views would allow me to make fairly complex queries efficiently. Making views puts you very close to the SqlServer engine and with that you have a lot of insight as to what is going on.

Well, with CodeFirst, making views is problematic because you are not actually creating your own tables and columns so going directly into the database is really not something you should do. Creating a view basically violates one of the main purposes of CodeFirst which is to remove you from having to see database details. Making a view now tightly binds your model to that view and if you change you model and not the view you are in for trouble.

The Problem

So, this long winded introduction is really about a fairly straight forward problem (one of many) that I had this morning. As I am building an email client, one thing I often do is want to retrieve a list of messages associated with a user, the address book of that user, as well as basic account information like IMAP servers and where I store the data (Azure, S3, Dropbox, etc.). So, the innocuous query with CodeFirst looks like this:

1: var userAccount =

2: db.Users

3: .Where(a => a.Username == username)

4: .Include(o => o.EmailDetails)

5: .Include(o => o.AddressBookEntries)

6: .Include(o => o.MediaStorageLocations.Select(a => a.MediaStorageLocationTypeDetail))

7: .Include(o => o.EmailAccount)

8: .FirstOrDefault();

Well, since my practice (though controversial) is to only clear my inbox about every 3 months for messages, at the moment I have 5000+ emails (small and large) in my inbox. This does however make me a good test subject for my new program. The above query takes 27 seconds to run on my inbox. Considering I want to be able to process tens of thousands of customers practically at the same time, this is not good. As it turns out, all I really need from the EmailDetails is one column and that’s the MessageUniqueId that I store from the IMAP server. This is so I don’t reprocess the same message multiple times. So, pulling out the include of EmailDetails and making that a separate Linq statement yields the following code:

1: var userAccount =

2: db.Users

3: .Where(a => a.Username == username)

4: //.Include(o => o.EmailDetails)

5: .Include(o => o.AddressBookEntries)

6: .Include(o => o.MediaStorageLocations.Select(a => a.MediaStorageLocationTypeDetail))

7: .Include(o => o.EmailAccount)

8: .FirstOrDefault();

9:

10: var emailDeatilsUniqueIds =

11: db.EmailDetails.Where(a => a.User.Username == username).Select(a => a.MessageUniqueId).ToList();

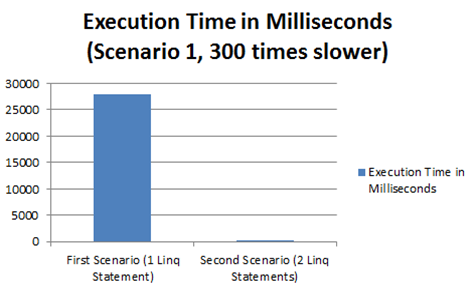

So, Here are my results comparing the old and new scenario, both using code first

Conclusion

Small things make huge differences.

I’m learning a ton. I have another scenario, which is something my application will literally do millions of time that I spent about two days optimizing (apparently not very well) using CodeFirst Linq and on my inbox, I brought the performance from taking about 2 minutes to execute down to about 3 seconds (on my local quadcore notebook). Realizing this was still a problem, I decided to give a shot at building it with a sql statement and large pile of inner and outer joins, subqueries, etc. Well, 2 hours later, this same scenario was taking 100 milliseconds to execute. As you would expect, it’s a fairly complex problem and blogging is not trivial, but the basics are that I used my codefirst database context to send a complex to the query returning it to a c# object. Something I’d rather not have done, but 3 seconds verses 100ms for something I do millions of times felt somehow worth it (though somewhat smelly).

1: addressBookEntryIds = db.Database.SqlQuery<long>(sql1).ToList();

Thoughts? Opinions? Want to hear more? Let me know.